OverExpressed

Demystification is a critical part of education

I firmly believe that most anyone can be taught most anything, if given the right resources. I’ve stated before that the future of education will be very personal, and there have been a number of recent reports supporting this trend. However, there’s still a key hurdle that we frequently underestimate: mist.

When I say mist I’m referring specifically to the foggy notions that lead someone to believe a concept or activity is beyond them. This unfamiliarity with a topic obscures one’s motivation and ability to even begin tackling it. You become paralyzed by a debilitating lack of confidence that is all too often instigated by those who are best positioned to alleviate it.

Of course, many people likely throw up intellectual barriers simply to boost their egos. When I use big words that you don’t understand, I point out how much smarter I am. Math is hard. This machine looks complicated. You can’t wiggle a stick around in just the right way to get a manual car to move properly; that takes years of experience*. Too many people throw up verbal barriers around the knowledge they’ve gained, as though they had to protect it in some sort of zero-sum knowledge game. But knowledge isn’t scarce. There’s plenty to go around, and it should be shared as freely, openly, and empathetically as possible.

While I’d hope my personal encounters with this phenomenon have been isolated incidents (the ivory tower seems to breed mist-makers), it’s unfortunately a pervasive annoyance. Mechanics, doctors, politicians, and pretty much any authority figure with financial or egotistical motives can be guilty of it. Mist is basically the tag line for Apple. Users can’t do anything to modify or upgrade their iCrap on their own. Kids are taught that they need a certified “Genius” just to help them change their batteries. Little boxes of circuits are “magic”. No, Mr. Jobs, they aren’t magic. Dragons are magic. Those are just well-understood tangles of wires. And mystifying them is bad for America. If you can’t open it, you don’t own it. You won’t always find me singing the praises of Microsoft, but it’s interesting to compare them with Apple in this respect. Windows-powered PCs seem built for modding and tweaking. In fact, Windows 7 was even my idea. The difference between these two approaches to customer respect is pretty glaring</rant>.

Clearing the Mist

Fortunately, there seem to be a number of anti-mist movements gaining momentum recently. Wikipedia brought a vast expanse of knowledge to everyone’s fingertips. Suddenly you could stand your ground when bullshitters tried to pull it out from under you. A long line of cheap or open source textbooks and video lectures promises to bring even richer learning experiences to everyone (although both fall short of the ideal education system of the future). JoVE and Instructables have also built themselves on the idea of opening up knowledge in a more visual way. Aardvark and Quora have built businesses on giving users immediate access to expertise, allowing you to bypass information-hiders with murky motives.

These kinds of tools, largely enabled by the internet, have helped accelerate a number of do-it-yourself (DIY) movements, including one of my personal favorites, DIYBio. It’s important for these initiatives to engage in early education and outreach to remove barriers for kids. They can do science. They can build a radio. They can replace their own batteries. I definitely wish I had been exposed to more hands-on work at a younger age. I’ve seen this become an issue with many grown-up people who are constantly held back just because certain topics or tasks are a little bit mystical to them. In fact, I bet 80% of inaction could be overcome with some simple demystification. And that’s part of what this blog is all about.

<postscript>

*Ok, maybe that was part of the impetus for this post. Some people told me driving stick would be too hard and I couldn’t master it in a week, let alone 15 minutes. I showed them. And I did it driving on the wrong side of the road! Take that, mist!

Tags: barriers, commentary, education, learning, Open source, opinion, outreach

My problem with gmail and buzz – they broke RTM

Ok, I’m a huge fan of gmail. And I’m actually pretty happy with buzz, as it’s opened up conversations with people I don’t normally interact with via standard web-based social networks. It’s also great how quick Google is to implement enhancements to these products. But this has led to one major issue I’ve had with Google over the past couple of years: their updates frequently break third-party plugins that leverage Google’s APIs.

<rant>

Of course, I wouldn’t necessarily expect Google to work hand-in-hand with every single third-party developer, but they should at least keep some major ones in the know. In this case I’m referring to Remember the Milk. One of the most useful applications I’ve encountered on the web is the Remember the Milk plugin for gmail. For those who aren’t familiar with it, RTM is a simple task manager that lets you easily add tasks by typing things like “Send in rent check Thursday”. But really there isn’t anything that special about RTM when compared with the vast array of competitors (PhiTodo, Taskbarn, Remindr, teamtasks, Task2Gather, TheBigPic, Producteev, Basecamp, Planzone, and LifeTick, to name a few). In fact, RTM really disappoints when it comes to organizing and planning big-picture goals (TheBigPic and LifeTick are both much better for this). But the key redeeming feature for RTM is the gmail plugin.

The RTM gmail plugin is incredible. It is one of the few utilities that I absolutely rely on to remain organized on a day-to-day basis (others include gmail and gcal). And the key feature of the gmail plugin is that you can simply star an email and have it automatically become a task in the RTM side panel. This is extremely helpful for keeping up with emails. I can prioritize and postpone responses by relevance, and clicking on the task in the side panel will pull the relevant email up so I can address it right away. These features are especially critical since probably 70% of my tasks come as emails.

Google Broke RTM

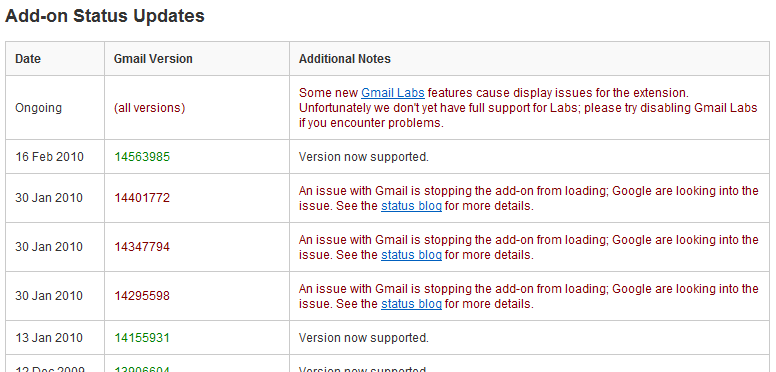

However (and this is where my beef with gmail comes in), Google frequently updates the code for gmail, and many times that actually breaks features in the RTM plugin (with over 90 instances of a break and a required RTM fix!). It’s generally only a relatively small break for a day or so. But for the past three weeks (up until late Monday night), the RTM plugin has been completely down. This is, of course, because of Google’s widespread updates and code freeze associated with the release of Buzz, though we had no way of knowing that when the issue first cropped up in the RTM forums.

So it’s mildly frustrating relying so heavily on a plugin that is at the mercy of every minor update pushed by Google. I’m hopeful that Google will try to work more closely with RTM in the future. Or maybe they could improve their own native Tasks application to bring it on par with RTM’s other features (though that’s obviously the more monopolistic solution).

</rant>

Anyway, I guess the main take-aways here are:

- Buzz is awesome, but Google needs to play nicer with third party applications, especially as we become more reliant on these cloud services for day-to-day work.

- You should try out the RTM Gmail plugin (make sure to use the actual Firefox or Chrome addon, the labs gadget isn’t even close). I don’t know how I kept track of things before it. I’ve definitely had trouble keeping up with tasks over the past three weeks while it went dark (sorry to everyone for that).

Tags: Google, Google Chrome, Mozilla Firefox, Remember the Milk, Tasks

Stuck in Android Limbo

I spent over two years using a fat clamshell phone with glittery rainbow and penguin stickers on it. This would not be surprising if I were a 14-year-old girl. But I was that girl’s older brother. Aside from the stickers and clunky interface, the phone only had space for approximately 5 text messages (making me constantly have to delete messages to get new ones). However, the camera seemed to miraculously circumvent this limitation, as evidenced by at least 200 dark photos taken of the inside of my pocket.

The reason I subjected myself to this was not some kind of comedic irony (a known technophile regularly comparing his Ericsson brick phone with the circle of iPhones he is generally surrounded by), but rather that I just didn’t see any phones come out during those two and a half years that were worth investing in. And I’m not just talking about a financial investment (though that is significant as well). There are also mental and temporal investments. I have to learn how the phone works, get used to its strengths and flaws, and set everything up just how I want it. On top of that, I have to invest time learning which apps are best and getting them to work properly. And up until recently, I didn’t see any phone that was worth the money and effort for me.

The Rise of Android

My labmates seemed to think this was the most appropriate message for my birthday cake. Clearly they know me very well.

So what changed? Google launched a mobile operating system. This was a game-changer for me. Before getting into why Google’s phone OS is awesome, let me take a moment to clarify just what Android is and isn’t (you can skip this if you know more about technology than 80% of my friends apparently do):

- Android is an operating system, like Windows or OS X on computers, but designed specifically for use on small devices (such as phones or even microwaves). It will probably be turning up on a lot more devices in the near future.

- Android is open source, meaning anyone can download the full source code and modify it for free. This makes it attractive for hardware manufacturers, as it increases their overall margins on a device. This is comparable to what is happening with open source operating systems on computers (like Ubuntu or Google’s Chrome OS). However, while computer operating systems have been developed for decades, advanced smartphone operating systems are still at the nascent stages of design and feature offerings. It’s thus much easier for an open source alternative to compete and grab market share from established systems quickly (people aren’t as committed to phone operating systems as many are to Windows, for example).

- Android is not a phone made by Motorola and sold by Verizon. The Droid phone runs the Android operating system, but the phone itself is not made by Google. An analogy would be a computer (phone) made by Dell (Motorola) that runs the Windows (Android) operating system.

Ok so now we’re on the same page. But why is Android so awesome? In my opinion, Android kicks ass because:

- It’s open | This has a few advantages. First, it’s free (ultimately reducing the bottom line on phones). But beyond that, it is able to grow with fairly rapid development cycles. Additionally, third party software is not regulated like it is on some fascist phones, leading to more creative and compelling applications. Shady policies surrounding software moderation are even driving developers to quit the iPhone.

- It unifies my phone-to-phone experience | It used to be that the only thing you cared about carrying over from one phone to the next was your contacts (and even this wasn’t a given – hence the many requests you see from people who have suddenly lost their address books). But now you’d like to switch to a new phone and be able to easily port your contacts, photos, text messages, applications, and other settings. If you lock yourself into a walled operating system and at some point you want to move to a cooler phone with a different OS, you’re basically out of luck. But since Android is being implemented on tons of phones now, from every carrier, you can readily jump from handset to handset while preserving your basic user experience and data. Google even plans to add a settings backup/restore feature in the near future.

- It’s backed by Google | And Google is awesome. They encourage optimal user experience in an array of applications. Almost every important application I use these days is improved by Google’s forward-thinking designs. And although some people are concerned about Google owning too much of our digital lives, I personally believe Google doesn’t intend to do any evil, and they’ve made some good strides for openness and data portability. Google is definitely in a strong position to revolutionize the mobile phone industry.

- It is well-designed | Sure, some of it is just eye candy, but the Android interfaces are really aesthetically pleasing and generally intuitive to manipulate. It also facilitates an unprecedented amount of customization of user experience. It’s an efficient and enjoyable OS to interact with.

The Perfect Storm

Yet, even with all of the clear benefits of moving towards an Android operating system, I passed on the earlier iterations of the G1 and MyTouch. It wasn’t until the HTC Hero launched that I was finally ready to make my investment.

The launch of the Hero was regarded by many as the first real competition for the iPhone in terms of performance and functionality. While previous Android phones were still a bit buggy and suffered from issues with battery life, speed, and basic functionality as a phone, HTC really built an excellent phone here. This phone also marked the introduction of HTC’s Sense UI, which is basically a modification of the Android OS to improve the user experience with some additional customization options. All in all, the hardware was finally there.

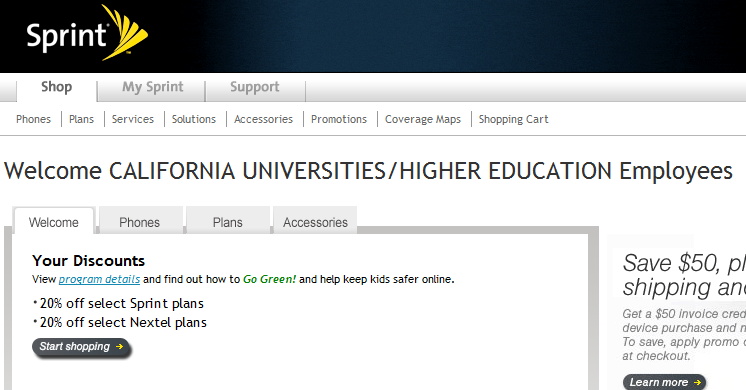

On top of this, I was surprised to find how appealing the actual carrier was in this instance. The Hero was the first Android phone launched on the Sprint network in the US. I had always used AT&T as my carrier (except for the brief period when it became Cingular and then eventually reverted back). And Sprint was actually really cheap! From some basic searching online, I discovered Sprint will give a discount to pretty much anyone with an organization email address. You just enter your email address at this site and they’ll send you a link to your “Private Sprint Store”. The specific rates vary, but for Berkeley this amounted to a 20% discount on plans! So this made the $69.99 unlimited plan (unlimited calls to any phone, unlimited data, and unlimited texts) just $56/month (about half what it would cost to get a similar plan with AT&T or Verizon). On top of that, I’ve anecdotally found the Sprint network to be really dependable in the areas where I’ve used it (Berkeley, San Francisco, Boston, Maryland, DC), and I seem to get better coverage than I used to with AT&T. So this helped push me over the edge with the Hero.

The Downside

So it’s about time I get to the title of this post. There are a few issues I’ve run into with this phone, but perhaps the most significant one is that of OS branching. Basically, since Android is open source, third parties can modify the core code that Google originally wrote to add specific functionality. This is like particular distributions of Linux that are modified to function/look a certain way (such as Ubuntu). In fact, Android itself is built on top of the Linux kernel. So in the case of the Hero, HTC has modified the core Android code to implement its “Sense” UI. This has likely improved the user experience, though I haven’t played with other Android phones (but I have friends using the Motorola Droid who seem to like my phone better specifically because of this UI enhancement). The downside is that now I have a very special version of Android that has to be specially modified any time there are core upgrades to the Android OS (which have been coming rather frequently, with a new version seemingly released with each new phone).

When I got the Hero, the most up-to-date version of Android was 1.5 (also known as Cupcake, in Google’s line of dessert-inspired names). It was very quickly upgraded to 1.6 (Donut), but I wasn’t able to make this upgrade on my phone because of the modifications HTC made to the OS (at least not without rooting my phone, voiding warranties, etc.). Sprint announced an upcoming upgrade to 1.6, but then the Droid came out with 2.0 and the release timeline gradually slipped to the point where Sprint has now said we’ll skip 1.6 and jump straight to 2.1, sometime in the first half of 2010. That could be June. And by then, 2.5 or 3.0 might be released. So the upgrade cycle definitely lags thanks to OS branching.

Hopefully these sorts of delays will be reduced by adjustments to the core Android platform in the future (and as more phones offer the “full” Google experience), but for now you just have to be satisfied with the phone as it is when you buy it, knowing its OS could be obsolete in a matter of weeks. And I’m definitely satisfied with my Hero, so Android limbo isn’t so bad after all.

Tags: Android, Google, HTC Hero, Open source, Operating system, Phone

The Future of Education

The most depressing class I ever took was freshman intro chemistry. Granted, it was advanced intro chemistry (oxymoronic, but that’s how the course identifications work at MIT; extra numbers = harder, extra letters = easier). So this was 5.112 (as opposed to the standard introductory 5.111). So why was it so depressing? I had learned the majority of this stuff already in my high school AP Chemistry course. I had actually done rather well in the chemistry class, finding most of the material to be quite manageable and I scored as well as you can on the AP exam. That’s obviously not the depressing part, though.

The sad part came when I realized I had forgotten a significant amount of the material I had mastered only a couple of years earlier. Moreover, I was finding the material even harder the second time around. This made me come to two harsh realizations:

- My high school instruction was better than the equivalent MIT instruction for this particular course.

- I can forget something pretty quickly, particularly if I’m not using it regularly.

This really marked a turning point in my education. For the first time, I felt the frustration of viewing previously familiar material with virgin eyes. Realizing how short-lived any particular piece of knowledge could be, I decided I would no longer sweat the small stuff. I didn’t kill myself memorizing and practicing things that I didn’t find interesting or relevant for my near-term future. I grabbed the big picture, and delved in deeper just where I felt like it. As a result, MIT was a very pleasant experience for me. Unlike my indiscriminately intense study habits in high school, I decided to focus on just the parts I cared about, knowing that I’d have to relearn anything that I needed to actually use in the real world.

This obviously is not efficient. Both the impersonal method of instruction, and the (effectively nonexistent) means of knowledge retention in the current system leave quite a bit to be desired. So what will education look like in the future?

Education will be Personal

This is what we were all told in third grade while taking those silly tests to determine our learning style. I probably leaned more towards the visual/reading side of things, and secretly questioned the validity of “kinesthetic” learning, but it didn’t really matter anyway. These tests never had a real impact on anyone’s academic pursuits. Education is still done one batch at a time, with everyone receiving the same content. At best, some instructors mix teaching styles with some overlap in order to bring as many students along as possible, perhaps incorporating hands-on experiments, group discussions, and visual effects into a standard lecture. But this isn’t the ideal solution. When I say personal, I mean really personal.

Every student brings two wild cards to the education table: their learning style, and their current knowledge. Now those are some pretty huge frickin’ variables, and I’d argue the latter is most important. Yet students are all presented with the exact same material within a given batch, at best receiving some sort of “refresher” or “catch-up” material. This can’t possibly fill all of the cracks. So you end up with a good percentage of students trying to learn new material on a foundation that is full of gaps. Not the best structural engineering approach. Especially considering you can’t really teach someone anything unless they almost already know it (forgot who said this – anyone know?).

Learning is incremental, and it’s nearly impossible to really grasp new material before fully understanding the concepts preceding it. This is partially why I much prefer to read a whole textbook from cover to cover rather than receive whatever bits and pieces my teacher deems important enough to cram into an artificially imposed academic calendar. Courses need to be personal not just to the student, but also to the material. Students should accomplish work at their own pace, with recommended windows for milestone completion to help motivate them along. The key is to always be progressing and retaining what you are learning, not necessarily to move faster than everyone else. Regular assessment of understanding would be integral, allowing students to review any modular subjects they might be lacking.

As an intermediate step, video lectures will become much more popular, and we’ll eventually see the “best” Physics 1 lectures rise to the top. This is already happening, with recent studies showing that 82% of students at University of Wisconsin-Madison would rather watch video lectures, with 60% saying they would even be willing to pay for those lectures. Their reasons were generally linked to a more personal experience (watching lectures “on-demand”, making up for missed lectures, etc.). But eventually, the standard lecture format will have to give way to more interactive media that tests and reinforces throughout the teaching process.

Interactive Learning will Take Over

While working at Lawrence Livermore National Laboratory, I took a few online training courses that involved interactive material. The interfaces definitely weren’t ideal, but they were a step in the right direction. Users were presented with Flash-based tutorials followed by simple quizzes to reinforce key topics, with some navigation controls to help with reviewing material that was not adequately retained.

Imagine how much further this could be pushed. You could open up a video of a lecturer speaking, with interactive tutorial elements playing on the side. These could be standard graphs, figures, and videos, or more complex boxes taking in user inputs to produce simple visualizations that explain a concept much better than the waving of a hand or the scratching of chalk. MIT has some of this kind of content associated with their courses, but it’s definitely not as well-integrated into individual curricula as it should be. It’s fairly clunky to have to go back and search through a list of visualizations when you’re first learning (or subsequently reviewing) a topic.

So now you have a student immersed in an interactive lesson, maybe even taking advantage of some new Minority Report-style interface tools being developed by a few companies. Throughout the process, students can be prompted with questions to confirm they are grasping a concept before moving on to the next one. In large lectures, this doesn’t happen. If you get lost somewhere, you remain in the dark for the rest of your miserable time there. You could ask a question, but that’s a fairly inefficient solution in a large room of students where many people are not lost. But with gradual questions integrated throughout the process, it’s easy to identify any stumbling points. The software could even be smart enough to break a question down into component concepts, asking a second series of questions, and a third, and a fourth, and so on, until the root problem area is identified. The student can then review that area until he or she is ready to return to the work at hand. That’s what “no child left behind” should really mean.
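The drill-down described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not any existing product: the prerequisite map `PREREQS`, the `passes_quiz` callback (standing in for "ask the student a question about this concept"), and the function name `find_root_gaps` are all hypothetical. The search only descends into prerequisites the student also misses, and stops at the deepest concept whose own foundations are solid.

```python
from typing import Callable, Dict, List, Set

# Hypothetical prerequisite map: each concept lists the concepts it builds on.
PREREQS: Dict[str, List[str]] = {
    "derivatives": ["limits", "functions"],
    "limits": ["functions", "inequalities"],
    "functions": ["algebra"],
    "inequalities": ["algebra"],
    "algebra": [],
}


def find_root_gaps(
    concept: str,
    prereqs: Dict[str, List[str]],
    passes_quiz: Callable[[str], bool],
) -> List[str]:
    """Return the most basic missing concepts beneath a concept the student just failed.

    Each prerequisite is quizzed at most once; the search drills down only into
    prerequisites the student also fails. A failed concept whose own
    prerequisites all pass is a root gap, i.e. where review should start.
    """
    results: Dict[str, bool] = {}  # cache so no concept is quizzed twice
    visited: Set[str] = set()
    roots: List[str] = []

    def passes(c: str) -> bool:
        if c not in results:
            results[c] = passes_quiz(c)
        return results[c]

    def drill(c: str) -> None:
        if c in visited:
            return
        visited.add(c)
        failed = [p for p in prereqs.get(c, []) if not passes(p)]
        if not failed:
            roots.append(c)  # everything underneath is solid, so the gap is here
        for p in failed:
            drill(p)

    drill(concept)
    return roots


if __name__ == "__main__":
    # A student who knows algebra and functions, but not limits or inequalities.
    known = {"algebra", "functions"}
    print(find_root_gaps("derivatives", PREREQS, lambda c: c in known))
```

For that example student, the search passes through `limits` and stops at `inequalities`, since its only prerequisite (`algebra`) is already mastered; that is the area to review before returning to derivatives.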

Obviously there are some subjects that are more readily amenable to this new education platform. Mathematics, language, and the sciences are all excellent candidates. Some components of humanities education could also be addressed, with some modification. Writing might use peer-based editing and assessment (much like many writer groups that are being formed online today). Artistic and physical instruction can also be addressed with a range of new input devices. The Wii is great for a lot of things, and there’s finally an educational game for Guitar Hero with an actual guitar. These kinds of devices could eventually be integrated into a complete, interactive learning environment that is much more personalized than anything that could be offered in batch classroom settings.

Optimized Review will be Critical

A number of studies have emphasized the value of spaced repetition for memory retention. The basic idea is that after you’ve learned something, it is very easy to recall when you review it the next day. Then, as time goes on, it becomes harder and harder to remember until you have no clue what it was anymore. It turns out it’s probably optimal to review this material right before you’re about to forget it. With spaced repetition, you review the concept at optimally designed intervals to make sure you never forget it, with minimal time expenditure. A number of companies have been developing software to help push spaced repetition, but it has mostly been limited to desktop applications with flashcard-style learning (great for language, but sub-optimal for most other things). Smart.fm has received quite a bit of press lately for applying these strategies in a simple, web-based platform.
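To make the scheduling idea concrete, here is a short Python sketch loosely modeled on the SM-2 algorithm published for SuperMemo. It is not Smart.fm’s method (which isn’t described here), and the `Card` and `review` names are illustrative. Each successful review stretches the gap before the next one by an “ease” factor, while a failed recall starts the sequence over; intervals are rounded to the nearest day, which is one of several choices implementations make.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Card:
    """One item to remember plus its scheduling state."""
    ease: float = 2.5      # easiness factor: how quickly intervals grow
    interval: int = 0      # days until the next review
    repetitions: int = 0   # consecutive successful reviews


def review(card: Card, quality: int) -> Card:
    """Return the card's new state after a review graded 0 (blank) to 5 (perfect)."""
    if not 0 <= quality <= 5:
        raise ValueError("quality must be between 0 and 5")
    if quality < 3:
        # Recall failed: restart the interval sequence but keep the ease factor.
        return Card(ease=card.ease, interval=1, repetitions=0)
    if card.repetitions == 0:
        interval = 1
    elif card.repetitions == 1:
        interval = 6
    else:
        # Each later gap is the previous gap stretched by the ease factor.
        interval = round(card.interval * card.ease)
    # Easy recalls nudge the ease factor up, hard ones pull it down (floor of 1.3).
    ease = card.ease + (0.1 - (5 - quality) * (0.08 + (5 - quality) * 0.02))
    return Card(ease=max(1.3, ease), interval=interval, repetitions=card.repetitions + 1)


if __name__ == "__main__":
    card = Card()
    for grade in [5, 4, 4, 2, 5]:
        card = review(card, grade)
        print(f"grade {grade}: review again in {card.interval} day(s)")
```

With the grades in the demo, the gaps grow from 1 to 6 to 16 days, then drop back to 1 day after the failed recall, which is the “review right before you forget” pattern in miniature.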

The successful integration of optimized review right into the learning process will have a huge impact on education, helping students to actually remember most of what they’ve been taught, and saving all of the wasted time catching everyone up at the beginning of every new semester.

It will be Cheap and Ubiquitous

Right now I’ve got a phone in my pocket that is more powerful than the average computer was a few years ago. And on that phone I have access to millions of bits of absolutely free information, anytime and (almost) anywhere.

Of course, many people rely on Wikipedia as a trusted first stop, but expert-produced content is making its way into the free space. There are a number of sources for free video lectures, including my personal favorite. An MIT alumnus has even started a YouTube channel that offers comprehensive instructional videos on everything from chemistry to differential equations to banking (1,000+ videos! Thanks for pointing it out, Jamie). And recently, open source textbooks have gained some momentum with Flat World Knowledge and Wikibooks. Our very own Governator even pushed an initiative this year to get open source textbooks in high school classrooms throughout California, with the hope of ensuring high-quality and affordable education for everyone.

Ultimately, I believe educational content will become extremely cheap or free. But I don’t just mean video lectures and textbooks. I’m talking about entire educational programs with web-based content that’s optimized to improve students’ learning. Initial investments developing these programs will pay off as they drastically reduce many other economic burdens imposed by the traditional education industry.

And being web-based, these tools will be available to everyone with an internet connection, a number that is continuing to grow. Phones could even be used for rapid review of appropriate content. You could run through some vocab or quick math problems while waiting in line. And it could even become addictive if presented in a game format. The popularity of educational games on devices such as the Nintendo DS demonstrates that people are both willing and eager to apply their brains to constructive problems in gaming environments. So education will be cheap, everywhere, and addictive.

But Classrooms Still Have a Place

Moving to an entirely digital education would obviously have some terrible repercussions for social development. From the beginning of my time at MIT, I realized the reason the place was special wasn’t because of any fancy machines or brilliant lectures. It was special because of the connections you could make with some really amazing people. They used to say that at MIT there are three things that take up your time: Sleep, Social, and Study. You can only choose two. Anyone in my freshman dorm can tell you that Social ranked pretty highly for me, with Sleep taking a bit of a back seat.

Although some peer discussion could take place online, students will need actual human interaction to prepare them for the inherently collaborative nature of modern working environments. That’s why these tools would largely have to be an enabling supplement for higher-level discussions and projects in a classroom setting. Students could complete 80% of the learning on their own, with teachers and parents monitoring their progress via web interfaces. Then they could go to class knowing they have something substantial to contribute to bigger, more integrative goals.

So when do I get my robo teacher?

I’ve primarily been discussing ideal education systems for the future, but what’s practical in my lifetime? The biggest issues may lie in the fact that education is a huge industry. And like any huge industry, it has a lot of inertia that will take time to adjust. AcademHack has a great video presenting the issues our outdated “knowledge creation and dissemination” system will face in a modern, connected world. There are going to be some tough growing pains, much like we’ve seen with the recording industry, the film industry, television networks, and publishers. But clearly a lot of changes have to be made to reach an optimal system.

These tools will likely be implemented on a more individual basis in the near-future, as supplements to traditional schooling. However, it’s clear that our nation is moving towards efficiency by technology and personalization. We’ve been trying to get away from batchucation (coining a term – education in batches), and we finally have the web-based tools to make it happen. I’m excited to start using some of them to finally refresh all of the material I’ve inevitably forgotten.

Tags: education, Education reform, High school, MIT, teacher, Technology

The Scientific Journal of Failure

Science is riddled with failure

Really, it’s all over the place. It’s built right into the scientific method. You make a hypothesis, with a firm understanding that anything could happen to disprove your faulty notions. Sometimes it works and you see what you expected, and sometimes it doesn’t. And some of the most interesting discoveries of all time have come from these “accidents” where researchers stumbled on something that didn’t work how they expected it to.

However, the majority of these scientific snafus result in absolutely nothing. You just blew 16 hours and $3,000. Gone. But at least now you know that mixing A & B only produces C when you have the conditions very precisely set at D. But what happens to all of your failed attempts? Your (very expensive) failed attempts?

They disappear. There is neither a forum nor an incentive for researchers to publish their failures. This leads to an enormous amount of redundant effort, burning through millions of dollars every year (much of it taxpayer money). The system sounds really inefficient, right? So why do we do it?

People tend to focus on successes. Sure, things didn’t work out 6 or 7 or 17 times…but then one time it all came together. And then you go back and repeat every single obscure condition that led to your ephemeral success (even wearing the same underwear and eating the same breakfast – but maybe I’m just more scientifically rigorous than most). And what do you do? You manage to repeat the success and you publish it. Maybe you publish a parametric analysis showing how you optimized your result. But you have left a multitude of errors and false starts in your wake that will never see the light of day, leaving countless other researchers to stumble into the very same unfortunate pitfalls. It’s a real waste.

But this protects you. In the business world they call it “barriers to entry.” You’ve managed to find yourself racing ahead in first place on something, and the only thing you can do to protect yourself is drop banana peels to trip up your competitors (fingers crossed nobody gets a blue turtle shell). On top of that, every time you publish something, you’re putting your reputation on the line. A retraction can be devastating, particularly early in a career. Why take such a risk just to publish something that doesn’t even seem very significant? It doesn’t help you any. But is this good for science? Of course not. You’re delaying progress. You’re wasting money. And, particularly in the medical sciences, you’re probably actually killing people.

So Let’s Document The Traps

I propose a journal devoted entirely to failures. Scientists can publish any negative results that are deemed “unworthy” of standard publication. Heck, we can even delay publication by 6-12 months, to give the authors a little head-start on their competitors. And there would have to be some sort of attribution. But nobody wants their name attached to The Journal of FAIL. So let’s call it The Scientific Journal of “Progress”. Maybe without the quotes.

I wasn’t sure if such a journal existed already (though if it does, I certainly haven’t heard of it – and I’d probably be one of the biggest contributors, right behind this guy). The only close option that came up was the Journal of Failure Analysis and Prevention, but they seem to be focused on mechanical/chemical failures in industrial settings. So the playing field is still wide open.

Imagine if every experimental loose end was captured, categorized, and tagged in an efficient manner so that any person following a similar path in the future could actually have a legitimate shot at learning something from the mistakes of others. It would be kind of like a Nature Methods protocol, but actually including all of the ways things can go wrong. Authors could even establish credibility by describing why certain conditions didn’t work (a very uncommon practice among scientists). We might have to develop some technological tools that automatically pull and classify data as researchers collect it, making it a simple “tag and publish” task for the researcher. And people could reference your findings in the future, providing you with even more valuable street cred for the entire body of work you have developed (not just the sparse successes).

Of course none of this will happen any time soon. There simply isn’t enough of a motivating force to drive this kind of effort (besides good will). Maybe one day science will get its collective head out of its collective ass and things will change. And this will be one of those things. In the meantime, I will do my best to record and post tips on avoiding my own scientific missteps.

BONUS WEB2.0 TOOL: TinEye

[you’ve read this far, you deserve a reward]

I originally did a Google search for “Science Fail” and found the first image of this post on another blog. I was curious about the actual origins of the image, so I used the TinEye image search engine to find the real source. TinEye is just like any other search engine, except your query is an actual image. You just upload (or link to) an image of interest, and TinEye scours the web for any similar images (matching actual pixels, not metadata). I quickly found that the picture originally came from a “Science as Art” competition sponsored by the Materials Research Society (MRS). TinEye searched over 1.1011 billion images in 0.859 seconds to find that result. Pretty nifty, right?
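TinEye doesn’t publish exactly how its matching works, so what follows is only a sketch of one well-known way to compare images by their pixels instead of their metadata: an “average hash” fingerprint. The function names (`average_hash`, `hamming`), the 8×8 grid, and the toy gradient image are all hypothetical choices for illustration, not TinEye’s actual method. The idea: shrink the picture to a tiny grid, give each cell one bit depending on whether it’s brighter than the average, and call two images similar when few of those bits differ.

```python
def average_hash(pixels, size=8):
    """Return a size*size-bit fingerprint of a grayscale image.

    Hypothetical sketch, not TinEye's algorithm. `pixels` is a list of rows
    of brightness values (0-255); height and width are assumed to be
    multiples of `size` to keep the example short.
    """
    h, w = len(pixels), len(pixels[0])
    bh, bw = h // size, w // size
    # Shrink the image by averaging each bh x bw block of pixels.
    small = [
        sum(pixels[r][c]
            for r in range(i * bh, (i + 1) * bh)
            for c in range(j * bw, (j + 1) * bw)) / (bh * bw)
        for i in range(size)
        for j in range(size)
    ]
    mean = sum(small) / len(small)
    # One bit per block: 1 if the block is brighter than the image average.
    bits = 0
    for value in small:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming(a, b):
    """Count how many bits differ between two fingerprints."""
    return bin(a ^ b).count("1")


# Usage with a toy 16x16 gradient image.
img = [[r * 16 + c for c in range(16)] for r in range(16)]
brighter = [[min(255, v + 10) for v in row] for row in img]
flipped = img[::-1]  # upside down
print(hamming(average_hash(img), average_hash(brighter)))
print(hamming(average_hash(img), average_hash(flipped)))
```

Brightening the whole picture leaves this fingerprint unchanged, while flipping the test image upside down changes all 64 bits. A real engine searching billions of images would also need to cope with cropping, rotation, and fast lookup, which this toy ignores.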

The obvious applications are for controlling the distribution of copyrighted images, and there are some potentially frightening applications of such technology for facial recognition in the not-so-distant future (imagine someone sees you on the street, snaps a picture surreptitiously, and does a quick image search to find out who you are – and they’re likely to find out everything). Scary. But still kind of fun.

Tags: Image Search, Medicine, Publications, Scientific Journal, Scientific method
